Jun-Peng Jiang @ LAMDA, NJU-AI


蒋俊鹏

Short Biography

I received my B.Sc. degree from Soochow University in June 2021. In the same year, I was admitted without entrance examination to the Ph.D. program at Nanjing University. I study in the LAMDA Group led by Professor Zhi-Hua Zhou, under the supervision of Professor De-Chuan Zhan and Professor Han-Jia Ye.

Research Interests

My research interests include machine learning and data mining. I mainly focus on multimodal tabular data learning and its real-world applications:

Tabular Data Learning

Multimodal Large Language Model

Tabular Understanding

Preprints


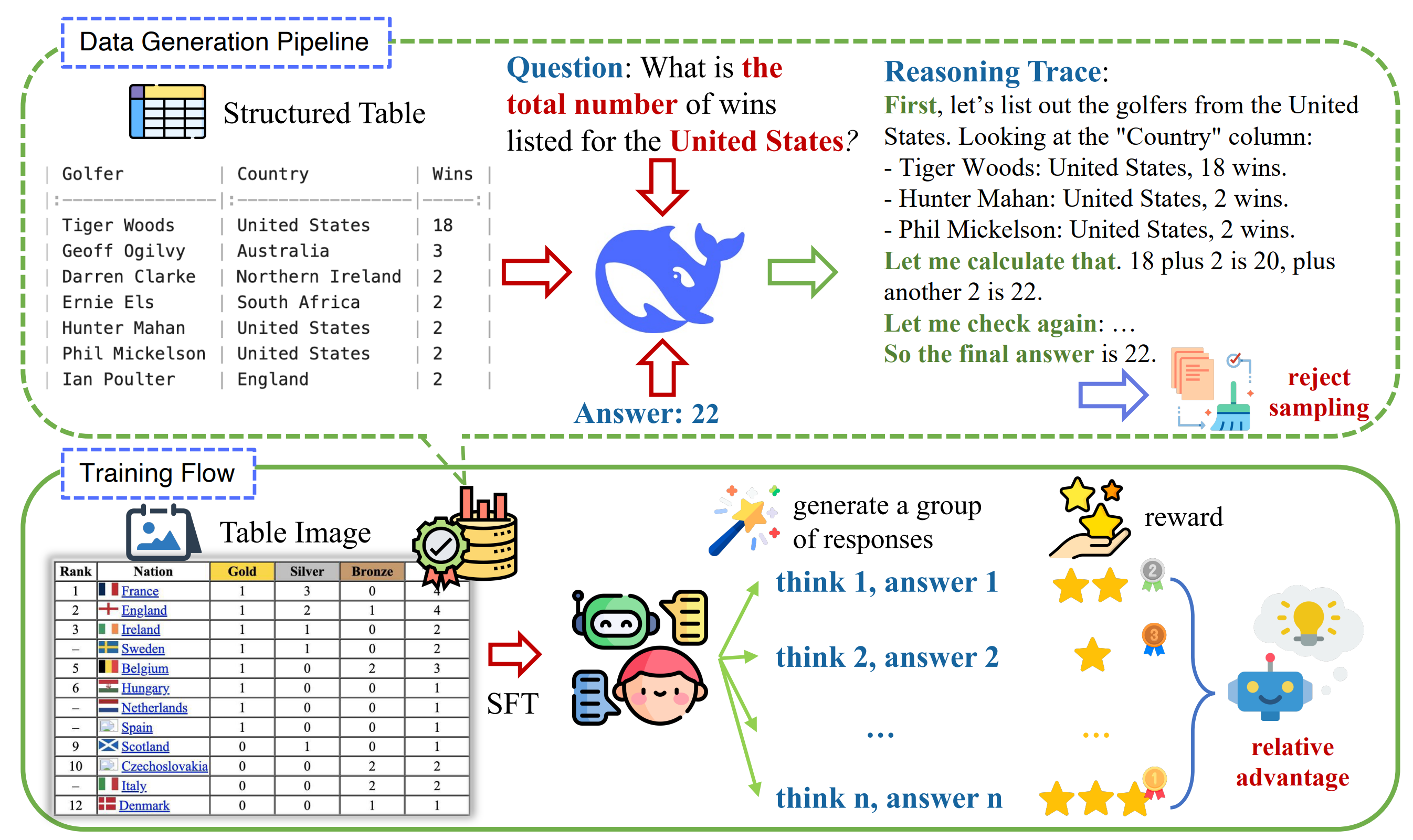

Tabular reasoning involves multi-step information extraction and logical inference over tabular data. Recent advances have leveraged large language models (LLMs) for reasoning over structured, text-serialized tables. However, such high-quality textual representations are often unavailable in real-world settings, where tables typically appear as images. In this paper, we tackle tabular reasoning from table images with TURBO, which leverages privileged structured information available during training to enhance multimodal large language models (MLLMs). Experimental results demonstrate that, with a limited amount of data (9k), TURBO achieves state-of-the-art performance (+7.2% over the previous SOTA) across multiple datasets.


Tabular data, structured as rows and columns, is among the most prevalent data types in machine learning classification and regression applications. Models for learning from tabular data have continuously evolved, with Deep Neural Networks (DNNs) recently demonstrating promising results through their capability of representation learning. In this survey, we systematically introduce the field of tabular representation learning, covering the background, challenges, and benchmarks, along with the pros and cons of using DNNs. We organize existing methods into three main categories according to their generalization capabilities: specialized, transferable, and general models.


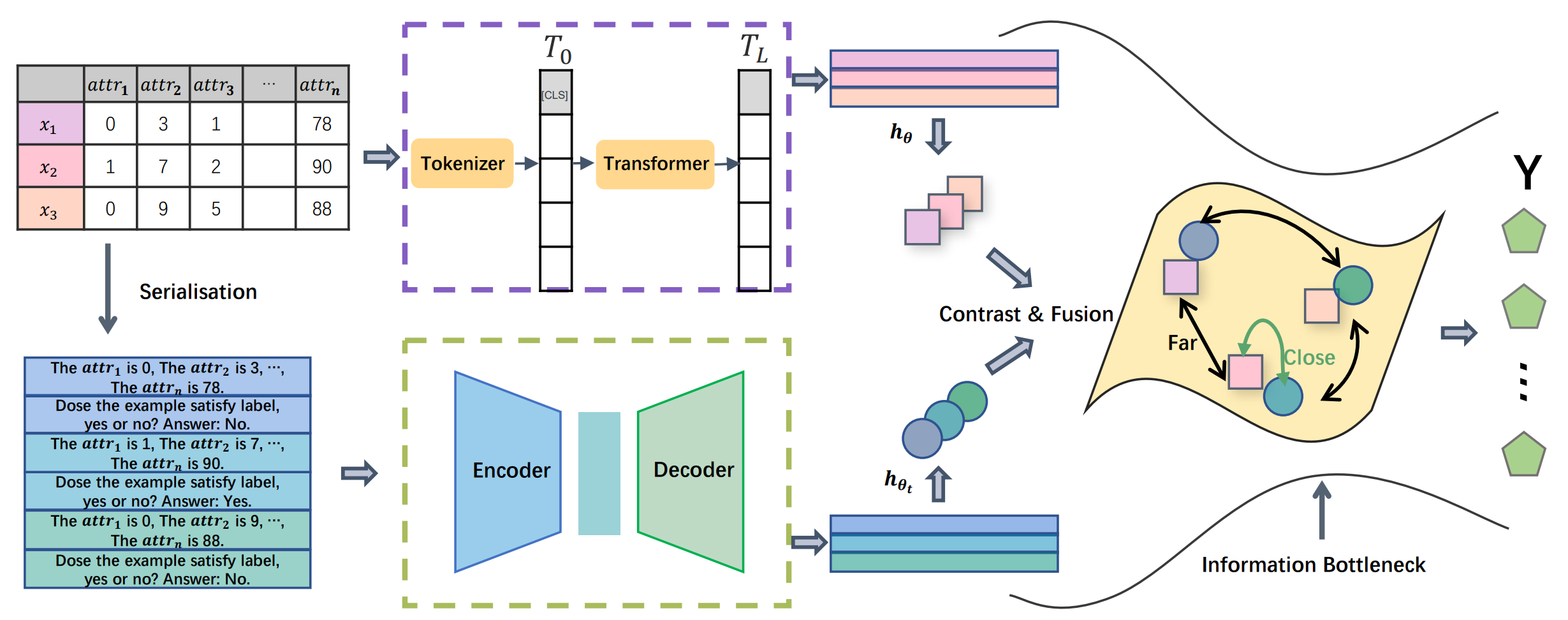

We propose a simple yet effective approach called Tabular TExt Contrastive fusiOn (TABTECO). It unlocks the power of text by maximizing the mutual information between the table and text modalities in a joint space and by fusing the discriminative information from both. TABTECO employs contrastive learning to align the two modalities within this shared latent space. It also incorporates the Information Bottleneck principle to strengthen the relationship between the shared space and the label space, which enhances the model's ability to focus on task-relevant features.
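The contrastive alignment step can be illustrated with a generic symmetric InfoNCE loss. This is a standard contrastive objective, and minimizing it maximizes a lower bound on the mutual information between paired views. The code below is a minimal sketch under stated assumptions, not TABTECO's implementation. It assumes that table and text embeddings have already been produced by some encoders and that row i of each batch is a matched pair. The `temperature` value is a hypothetical choice, and the Information Bottleneck term is not shown.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    # Scale to unit length; a zero vector is left unchanged.
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def log_softmax_row(row: List[float]) -> List[float]:
    # Numerically stable log-softmax via the max-shift trick.
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]


def symmetric_info_nce(table_emb: List[Vector], text_emb: List[Vector],
                       temperature: float = 0.1) -> float:
    """Hypothetical sketch: generic symmetric InfoNCE, not TABTECO's actual loss.

    Row i of table_emb and row i of text_emb are assumed to be a matched pair;
    all other rows in the batch act as negatives.
    """
    assert table_emb and len(table_emb) == len(text_emb)
    a = [l2_normalize(v) for v in table_emb]
    b = [l2_normalize(v) for v in text_emb]
    n = len(a)
    # Cosine-similarity matrix divided by the temperature.
    logits = [[sum(x * y for x, y in zip(a[i], b[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Table -> text: the positive for row i is the diagonal entry logits[i][i].
    loss_table_to_text = -sum(log_softmax_row(logits[i])[i] for i in range(n)) / n
    # Text -> table: the same objective applied to the columns.
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_text_to_table = -sum(log_softmax_row(cols[j])[j] for j in range(n)) / n
    return 0.5 * (loss_table_to_text + loss_text_to_table)
```

Consider the toy batch `[[1, 0], [0, 1]]` at temperature 0.1. Matched pairs give a loss near zero, while swapping the two text embeddings raises it to about 10. Averaging both directions keeps the objective symmetric in the two modalities.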

Publications - Conference


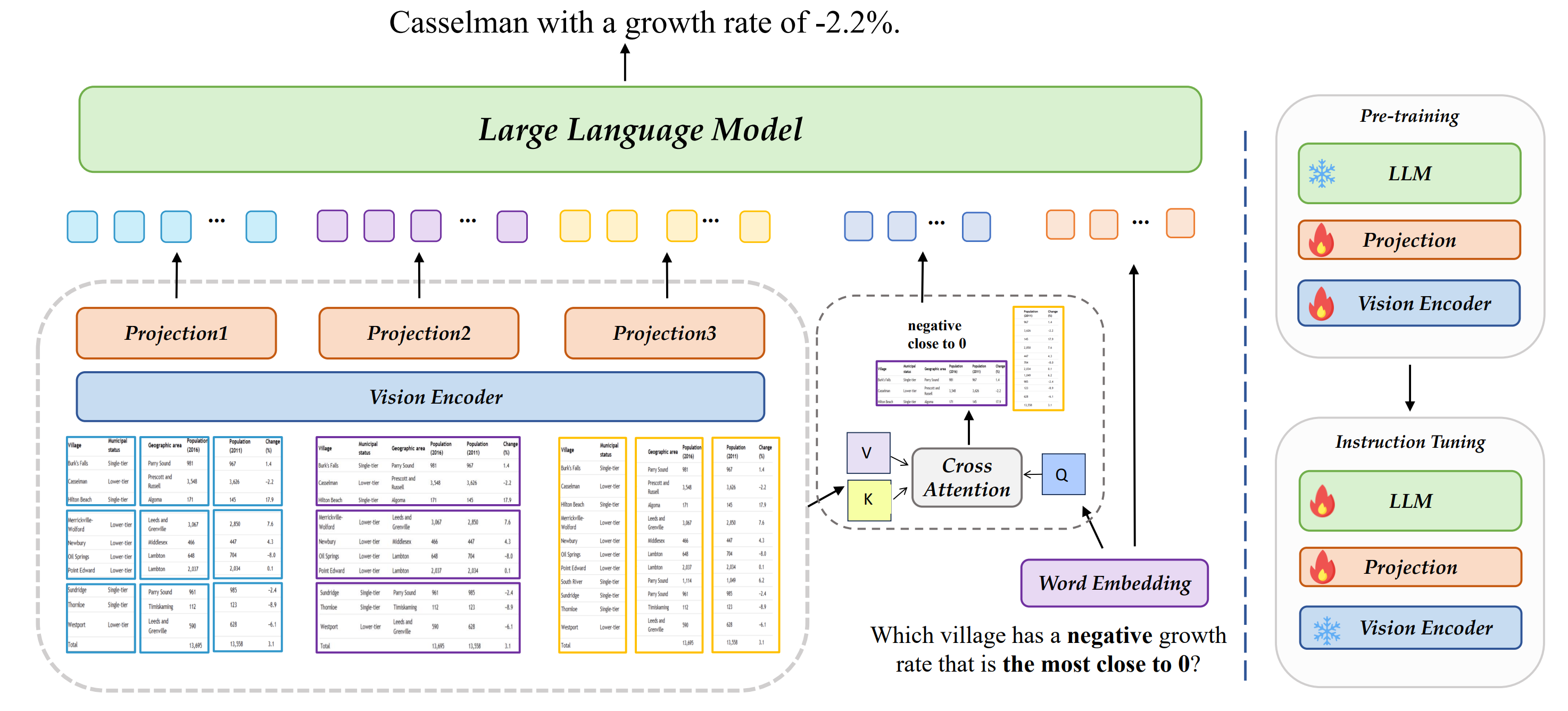

We introduce a new Compositional Condition Tabular Understanding method, called CoCoTab. Specifically, to capture the structural relationships within tables, we enhance the visual encoder with additional row and column patches. Moreover, we introduce conditional tokens between the visual patches and query embeddings, ensuring that the model focuses on the parts of the table relevant to the conditions specified in the query. We also introduce the Massive Multimodal Tabular Understanding (MMTU) benchmark, which comprehensively assesses the capabilities of MLLMs in tabular understanding.


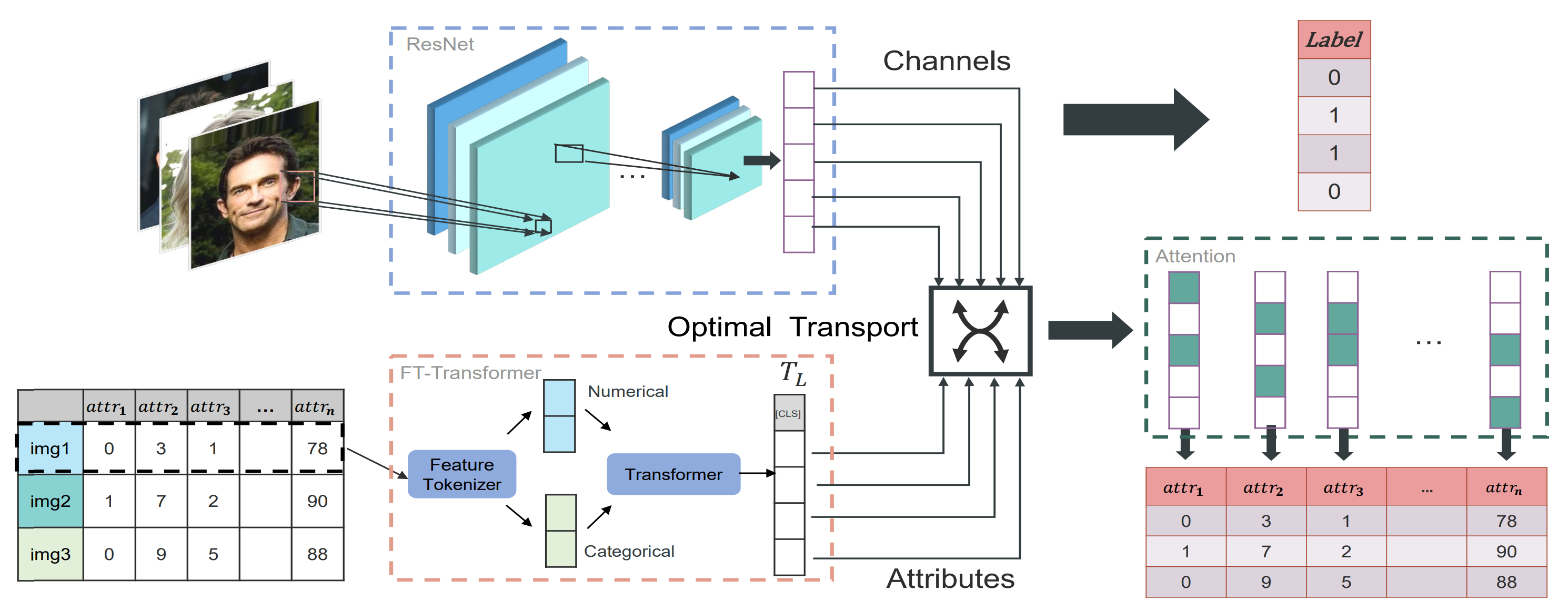

We propose CHannel tAbulaR alignment with optiMal tranSport (CHARMS), which establishes an alignment between image channels and tabular attributes. This alignment enables selective transfer of the tabular knowledge that is pertinent to visual features. By maximizing the mutual information between image channels and tabular features, CHARMS extracts knowledge from both numerical and categorical tabular attributes. Experimental results demonstrate that CHARMS not only enhances the performance of image classifiers but also improves their interpretability by effectively utilizing tabular knowledge.
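The channel-attribute alignment relies on optimal transport. A common way to compute an entropy-regularized transport plan is the Sinkhorn-Knopp iteration, sketched below with uniform marginals. This is a generic illustration, not CHARMS's implementation. How CHARMS builds the cost between image channels and tabular attributes is not described here, so the `cost` matrix, `epsilon`, and `n_iters` are hypothetical inputs.

```python
import math
from typing import List

Matrix = List[List[float]]


def sinkhorn(cost: Matrix, epsilon: float = 0.1, n_iters: int = 200) -> Matrix:
    """Hypothetical sketch: entropy-regularized OT plan via Sinkhorn-Knopp.

    cost[i][j] is an assumed cost of matching image channel i to tabular
    attribute j; both sides get uniform mass. Not CHARMS's actual procedure.
    """
    n, m = len(cost), len(cost[0])
    a = [1.0 / n] * n  # uniform mass on each image channel
    b = [1.0 / m] * m  # uniform mass on each tabular attribute
    # Gibbs kernel: low cost -> large affinity.
    K = [[math.exp(-c / epsilon) for c in row] for row in cost]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    # Transport plan P = diag(u) K diag(v); its column sums equal b exactly.
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]
```

Each row of the returned plan shows how much of one channel's mass is transported to each attribute, so a large entry indicates a strong channel-attribute match. For very small `epsilon`, `exp(-c / epsilon)` can underflow. The usual remedy is a log-domain variant.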

Honors

Grand Prize, China Telecom Track, Challenge Cup "Jiebang Guashuai" Special Competition, 2024

Nanjing University Dongliang Scholarship, Excellence Award, 2024

2nd place in IJCAI 2024 Competition - Visually Rich Form Document Intelligence and Understanding: Track B

Grand Prize, Baidu Commercial AI Technology Innovation Competition, 2023

Outstanding Student Award, Huawei-Nanjing University Joint Laboratory, 2023

National Bronze Award, Challenge Cup China College Students' Entrepreneurship Plan Competition, 2022

Teaching Assistant

Machine Learning Primer. (MOOC, Online, Spring, 2024; Prof. Zhi-Hua Zhou and Prof. Han-Jia Ye)

Introduction to Machine Learning. (For undergraduate students, Autumn, 2023; Prof. Han-Jia Ye)

Introduction to Machine Learning. (For undergraduate students, Spring, 2022; Prof. Han-Jia Ye)

Correspondence

jiangjp@lamda.nju.edu.cn

Mail Address

Jun-Peng Jiang

National Key Laboratory for Novel Software Technology, Nanjing University, Xianlin Campus Mailbox 603,

163 Xianlin Avenue, Qixia District, Nanjing 210023, China

(南京市栖霞区仙林大道163号, 南京大学仙林校区603信箱, 软件新技术国家重点实验室, 210023.)