LAMDA-SSL [Tutorial][Github]:

A comprehensive and easy-to-use toolkit for semi-supervised learning. LAMDA-SSL contains 30+ semi-supervised learning algorithms, including both statistical and deep semi-supervised learning.

We hope this toolkit can promote the research of semi-supervised learning.

💻 Software

LaWGPT [Technical Report][Github]:

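LaWGPT is a series of open-source large language models built on Chinese legal knowledge. Starting from general-purpose Chinese base models (such as Chinese-LLaMA and ChatGLM), the series extends the vocabulary with legal-domain terms and pre-trains on a large-scale Chinese legal corpus, which strengthens the models' basic semantic understanding in the legal domain. On top of this, legal-domain dialogue question-answering datasets and a Chinese judicial examination dataset are constructed for instruction fine-tuning, which improves the models' ability to understand and act on legal content.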

📊 Benchmark

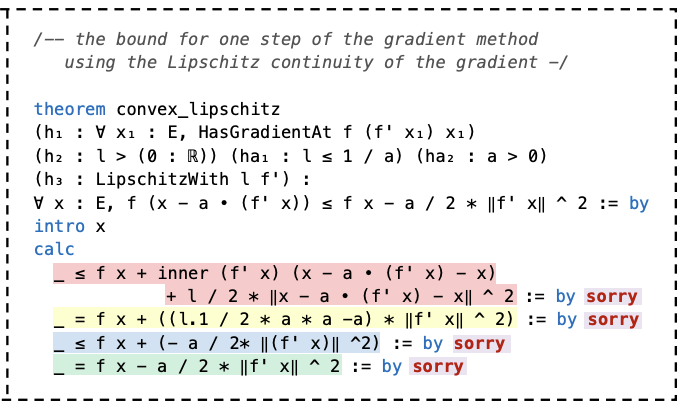

FormalML [Paper][Github][Huggingface]:

LLMs' ability to serve as practical assistants for mathematicians, filling in missing steps within complex proofs, remains underexplored. We identify this challenge as the task of subgoal completion, where an LLM must discharge short but nontrivial proof obligations left unresolved in a human-provided sketch. To study this problem, we introduce FormalML, a Lean 4 benchmark built from foundational theories of machine learning. FormalML is the first subgoal completion benchmark to combine premise retrieval and complex research-level contexts.
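
To make the notion of subgoal completion concrete, here is a toy Lean 4 sketch. It is not taken from FormalML: the theorem names and statements are hypothetical and far simpler than a research-level context. The first theorem is a human-provided sketch with one obligation left as `sorry`; the second shows the same sketch after the obligation is discharged by retrieving the premise `Nat.add_comm`.

```lean
-- Human-provided sketch: the overall argument is fixed, one subgoal is open.
theorem sketch (a b c : Nat) : a + b + c = c + (b + a) := by
  have h1 : a + b = b + a := Nat.add_comm a b
  -- Subgoal left unresolved for the model to complete.
  have h2 : b + a + c = c + (b + a) := by
    sorry
  rw [h1, h2]

-- The same sketch with the subgoal discharged using a retrieved premise.
theorem completed (a b c : Nat) : a + b + c = c + (b + a) := by
  have h1 : a + b = b + a := Nat.add_comm a b
  have h2 : b + a + c = c + (b + a) := by
    exact Nat.add_comm (b + a) c
  rw [h1, h2]
```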

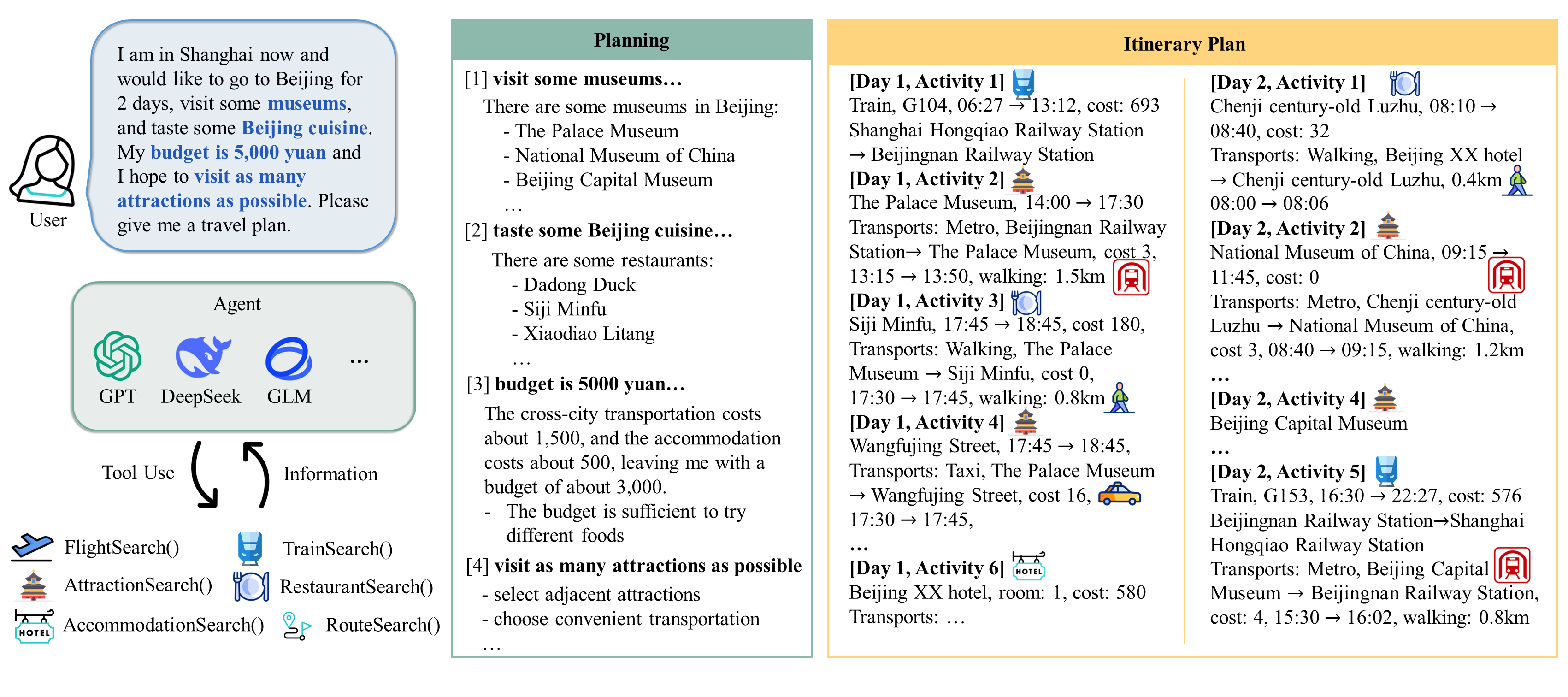

ChinaTravel [Project Page][Paper][Data][Code]:

A benchmark for evaluating the travel planning abilities of AI agents. Given a query, language agents employ various tools to gather information and plan a multi-day, multi-POI itinerary. The agents are expected to provide a feasible and reasonable plan while satisfying the hard logical constraints and soft preference requirements. For the convenience of international researchers, we provide an English translation of the original Chinese information here.

TabFSBench [Project Page][Paper][LeaderBoard][Code]:

A benchmark for evaluating tabular data learning models in open environments. Current tabular learning research predominantly focuses on closed environments, while in real-world applications, open environments are often encountered, where distribution and feature shifts occur, leading to significant degradation in model performance. TabFSBench evaluates the impacts of four distinct feature-shift scenarios on four tabular model categories across various datasets and assesses the performance of LLMs and tabular LLMs in the tabular benchmark for the first time.
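
As a rough illustration of why feature shifts degrade performance, the sketch below simulates one assumed scenario: a feature seen during training is unavailable at test time and is imputed with its training mean. This is not TabFSBench's protocol or API. The toy data, the nearest-centroid classifier, and every function name are hypothetical, chosen only to keep the example self-contained.

```python
from statistics import mean


def column_means(rows):
    """Per-column mean of a list of equal-length numeric rows."""
    return [mean(col) for col in zip(*rows)]


def apply_feature_shift(rows, missing_cols, fill_values):
    """Copy rows, replacing each column in missing_cols by its fill value."""
    missing = set(missing_cols)
    return [
        [fill_values[j] if j in missing else x for j, x in enumerate(row)]
        for row in rows
    ]


def fit_centroids(rows, labels):
    """Nearest-centroid 'training': one mean vector per class."""
    return {
        label: column_means([r for r, y in zip(rows, labels) if y == label])
        for label in sorted(set(labels))
    }


def predict(centroids, row):
    """Return the label whose centroid is closest in squared distance."""
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(row, center))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


def accuracy(centroids, rows, labels):
    hits = sum(predict(centroids, r) == y for r, y in zip(rows, labels))
    return hits / len(rows)


# Toy data (hypothetical): column 0 is informative, column 1 is noise.
train_x = [[0, 0], [0, 1], [4, 0], [4, 1]]
train_y = [0, 0, 1, 1]
test_x = [[0.5, 0.2], [3.5, 0.8]]
test_y = [0, 1]

model = fit_centroids(train_x, train_y)
clean_acc = accuracy(model, test_x, test_y)

# Assumed shift: column 0 is missing at test time, imputed by the training mean.
shifted_x = apply_feature_shift(test_x, [0], column_means(train_x))
shifted_acc = accuracy(model, shifted_x, test_y)

print(f"clean accuracy:   {clean_acc:.2f}")
print(f"shifted accuracy: {shifted_acc:.2f}")
```

Comparing the two printed accuracies shows the kind of gap between closed-environment and shifted evaluation that such a benchmark measures. A real study would use actual tabular models and datasets rather than this toy setup.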

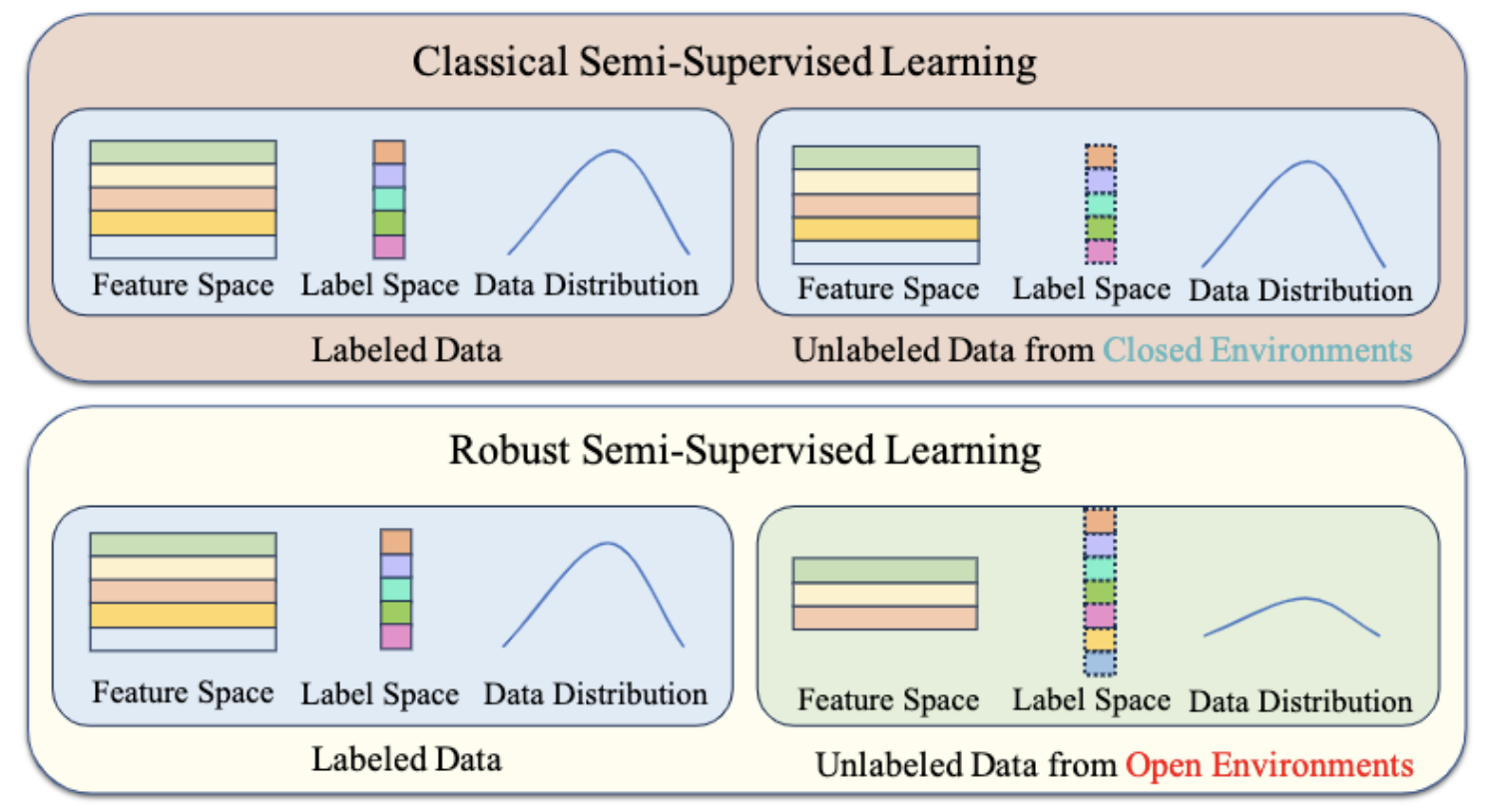

SSL Benchmark [Project Page][Paper][Code]:

A benchmark for evaluating semi-supervised learning models in open environments. We correct misconceptions in previous research on robust SSL and reshape its research framework by introducing new analytical methods and associated evaluation metrics from a dynamic perspective. The benchmark covers three types of open environments (inconsistent data distributions, inconsistent label spaces, and inconsistent feature spaces) and assesses widely used statistical and deep SSL algorithms on tabular, image, and text datasets.
