Exploit Bounding Box Annotations for Multi-label Object Recognition

Hao Yang, Joey Tianyi Zhou, Yu Zhang, Bin-Bin Gao, Jianxin Wu and Jianfei Cai

In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2016), Las Vegas, NV, USA, June 2016, pp. 280-288.

[Paper]

[Abstract]

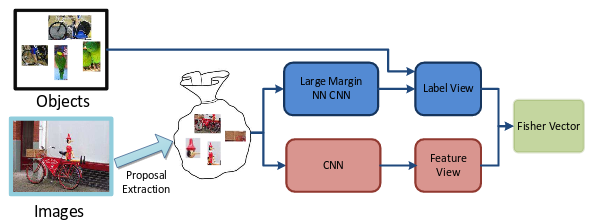

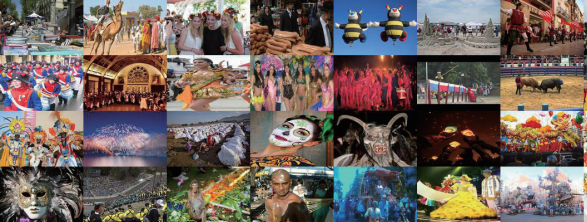

Convolutional neural networks (CNNs) have shown great performance as general feature representations for object recognition applications. However, for multi-label images that contain multiple objects from different categories, scales and locations, global CNN features are not optimal. In this paper, we incorporate local information to enhance the feature discriminative power. In particular, we first extract object proposals from each image. With each image treated as a bag and object proposals extracted from it treated as instances, we transform the multi-label recognition problem into a multi-class multi-instance learning problem. Then, in addition to extracting the typical CNN feature representation from each proposal, we propose to make use of ground-truth bounding box annotations (strong labels) to add another level of local information by using nearest-neighbor relationships of local regions to form a multi-view pipeline. The proposed multi-view multi-instance framework utilizes both weak and strong labels effectively, and more importantly it has the generalization ability to even boost the performance of unseen categories by partial strong labels from other categories. Our framework is extensively compared with state-of-the-art handcrafted feature based methods and CNN based methods on two multi-label benchmark datasets. The experimental results validate the discriminative power and the generalization ability of the proposed framework. With strong labels, our framework is able to achieve state-of-the-art results on both datasets.
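The bag-of-proposals idea in the abstract can be illustrated with a toy sketch. This is not the paper's implementation: the max-pooling aggregation, the k-nearest-neighbor class histogram used as the second view, and all names (`knn_label_view`, `max_pool`, `bag_representation`, `box_feats`, `box_labels`) are assumptions chosen for illustration, and the two-dimensional vectors stand in for CNN features.

```python
from math import dist


def knn_label_view(proposal, box_feats, box_labels, num_classes, k=2):
    """Class histogram over the k ground-truth boxes nearest to one proposal."""
    nearest = sorted(range(len(box_feats)),
                     key=lambda i: dist(proposal, box_feats[i]))[:k]
    hist = [0.0] * num_classes
    for i in nearest:
        hist[box_labels[i]] += 1.0 / k
    return hist


def max_pool(rows):
    """Per-dimension maximum over a list of equal-length vectors."""
    return [max(col) for col in zip(*rows)]


def bag_representation(proposals, box_feats, box_labels, num_classes, k=2):
    """Treat an image as a bag of proposals and build a two-view descriptor."""
    # View 1: pooled proposal features (stand-in for CNN features).
    feature_view = max_pool(proposals)
    # View 2: pooled nearest-neighbor label histograms from strong labels.
    label_view = max_pool([
        knn_label_view(p, box_feats, box_labels, num_classes, k)
        for p in proposals
    ])
    return feature_view + label_view


# Hypothetical toy data: four annotated boxes from two classes,
# and one image with two proposals.
box_feats = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]]
box_labels = [0, 0, 1, 1]
proposals = [[0.1, 0.2], [5.1, 5.4]]

bag = bag_representation(proposals, box_feats, box_labels, num_classes=2)
print(bag)
```

In this toy case each proposal lies next to boxes of a single class, so the pooled label view marks both classes as present in the image; the resulting bag vector could then be passed to any multi-label classifier.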

[BibTeX]

@inproceedings{yang2016exploit,
  title={Exploit bounding box annotations for multi-label object recognition},
  author={Yang, Hao and Zhou, Joey Tianyi and Zhang, Yu and Gao, Bin-Bin and Wu, Jianxin and Cai, Jianfei},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={280--288},
  year={2016}
}

[CCF-A]