Su Lu @ LAMDA, NJU-CS

Su Lu

Ph.D. Candidate, LAMDA Group

Department of Computer Science & Technology

Nanjing University, Nanjing 210023, China

Email: lus [at] lamda.nju.edu.cn

Short Bio

Su received his B.Sc. degree from Northwestern Polytechnical University in June 2018 (GPA ranked 1st of 183).

After that, he became an M.Sc. student in the LAMDA Group, led by Professor Zhi-Hua Zhou, at Nanjing University.

In September 2020, Su began his Ph.D. studies in machine learning under the supervision of Professor De-Chuan Zhan and Professor Lijun Zhang.

Main Research Interests

Su mainly focuses on machine learning, especially:

Meta-Learning

Meta-learning, or learning to learn, aims to extract meta-knowledge from previous tasks and reuse it in new tasks. It can be applied to few-shot learning, federated learning, hyper-parameter setting, and other related areas.

Knowledge Distillation

Knowledge distillation, which uses a well-trained teacher model to assist a student model, often accelerates training or improves model performance. This technique can also be used in model compression and other applications.
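As a rough illustration of the teacher-student idea above, the following Python sketch computes the classic temperature-scaled distillation loss for a single example. It is not taken from Su's papers; the function names, the temperature, and the weight `alpha` are assumptions chosen for illustration only.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by the given temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.5):
    """Weighted sum of a soft-target term and a hard-label term.

    temperature and alpha are hypothetical defaults, not values from the source.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the temperature-softened distributions
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Cross-entropy of the student against the ground-truth label
    ce = -math.log(softmax(student_logits)[label])
    # temperature**2 keeps the soft term's gradient scale comparable
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


loss = distillation_loss([2.0, 1.0, 0.1], [3.0, 0.5, 0.2], label=0)
print(f"distillation loss: {loss:.4f}")
```

A student whose logits match the teacher's incurs no soft-target penalty, so only the hard-label term remains.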

Publications - Preprints

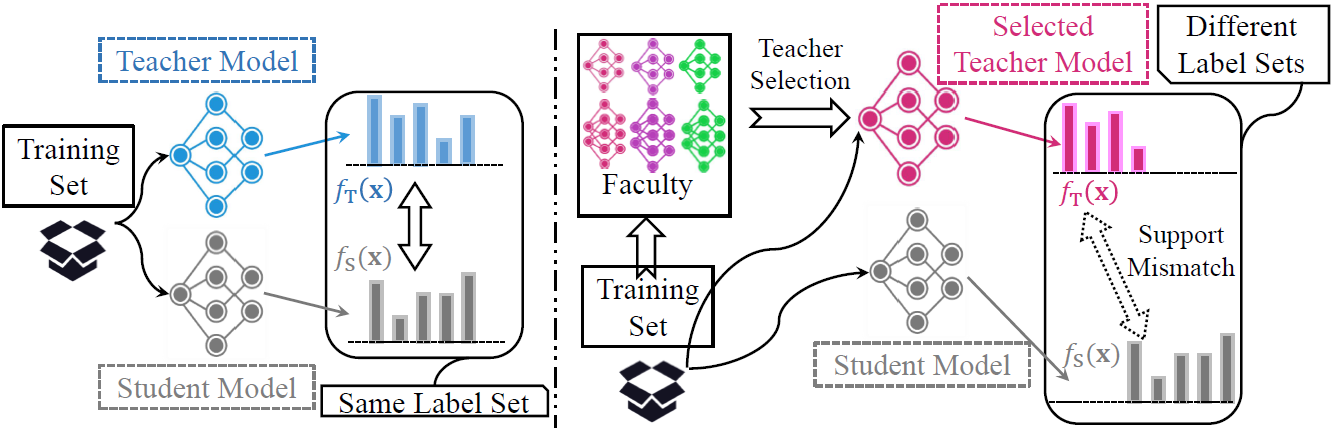

This paper studies a new knowledge distillation paradigm called 'Faculty Distillation', which selects the most suitable teacher model from a group of candidates and performs generalized knowledge reuse.

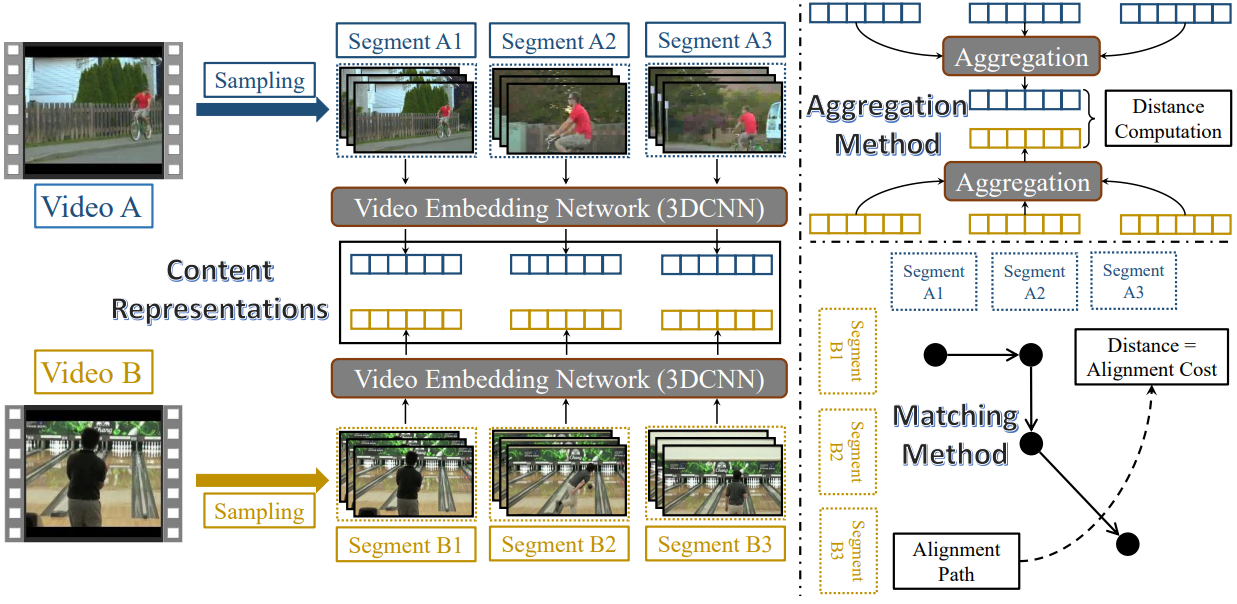

In this paper, we propose a compromise video metric that simultaneously considers and balances long-term and short-term temporal relations in videos. We define the distance between two videos as the optimal transport cost between their segment sequences, achieving promising performance in few-shot action recognition.

Publications - Conference Papers

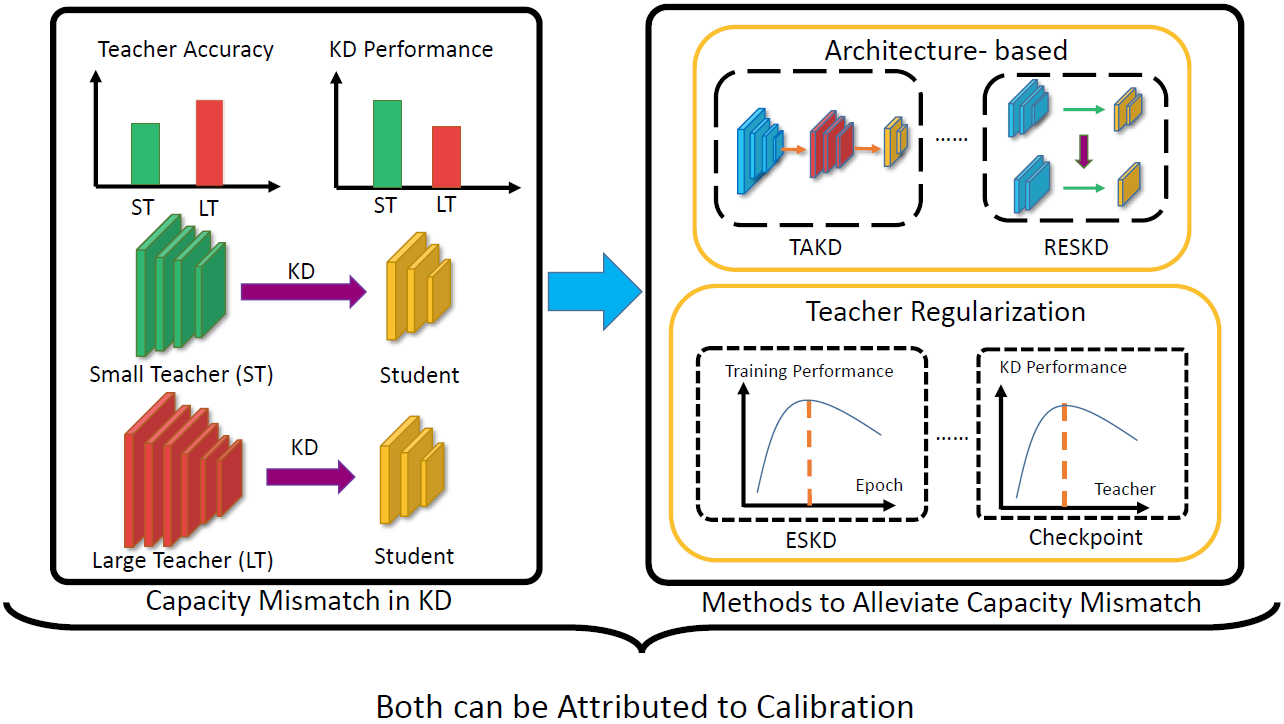

A counter-intuitive phenomenon known as capacity mismatch has been identified in knowledge distillation, wherein a better teacher does not necessarily lead to better KD performance for the student. In this paper, we propose a unifying analytical framework, based on calibration, to pinpoint the core of capacity mismatch.

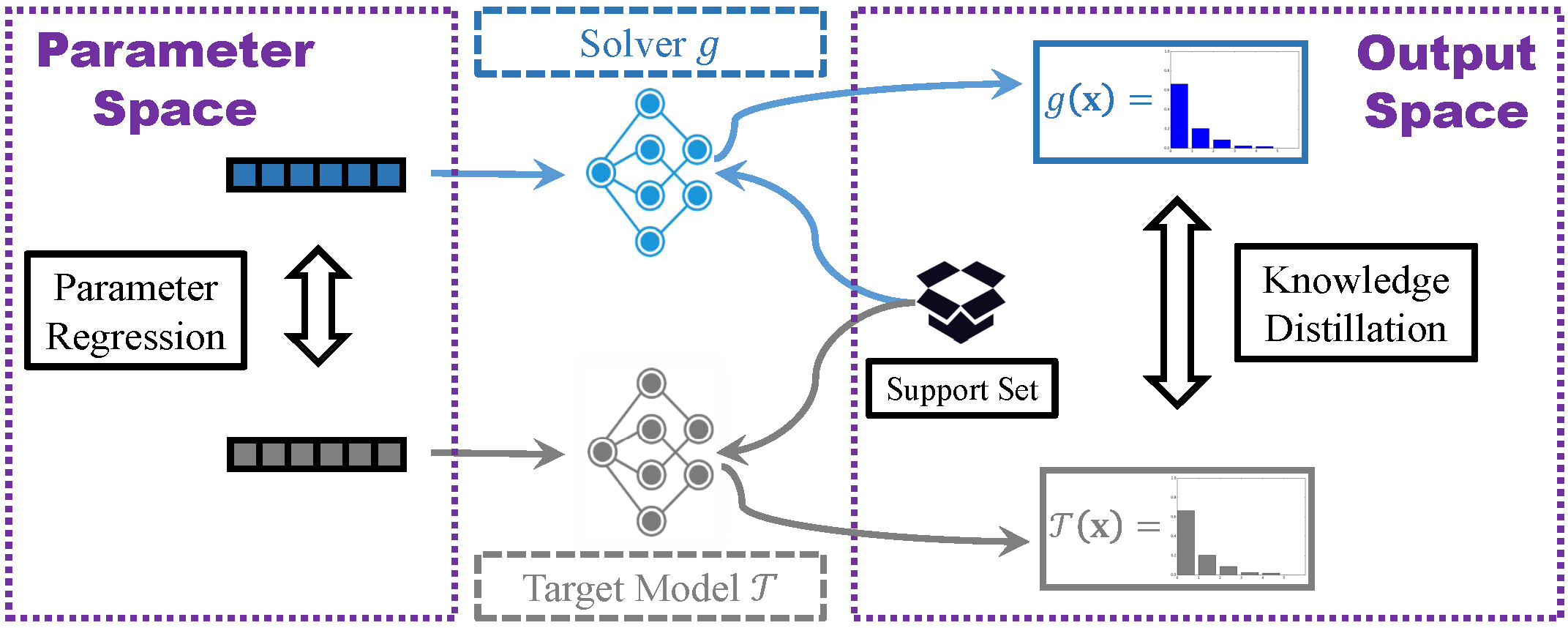

The widely adopted support-query protocol in meta-learning suffers from biased and noisy sampling of query instances. As an alternative, we evaluate the base model by measuring its distance to a target model. We show that a small number of target models can improve classic meta-learning algorithms.

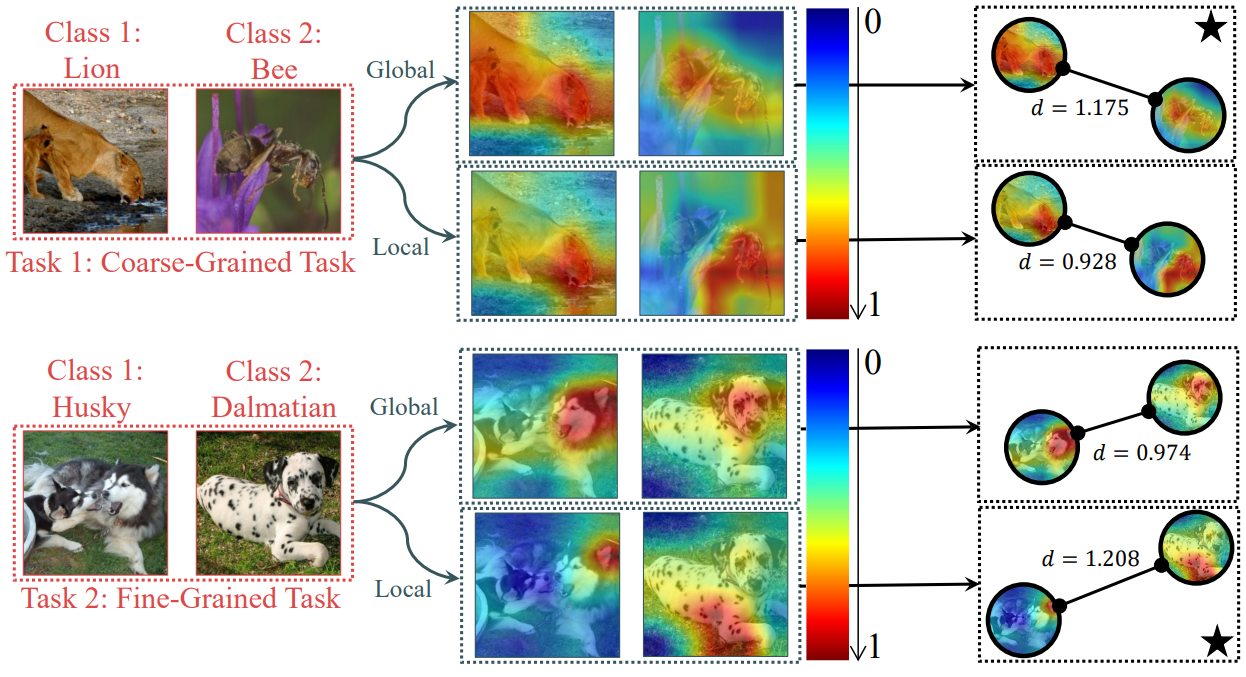

Task-adaptive representation is beneficial to metric-based few-shot learning algorithms. In this paper, we claim that class-specific local transformation helps to improve the representation ability of the feature adaptor, especially for heterogeneous tasks.

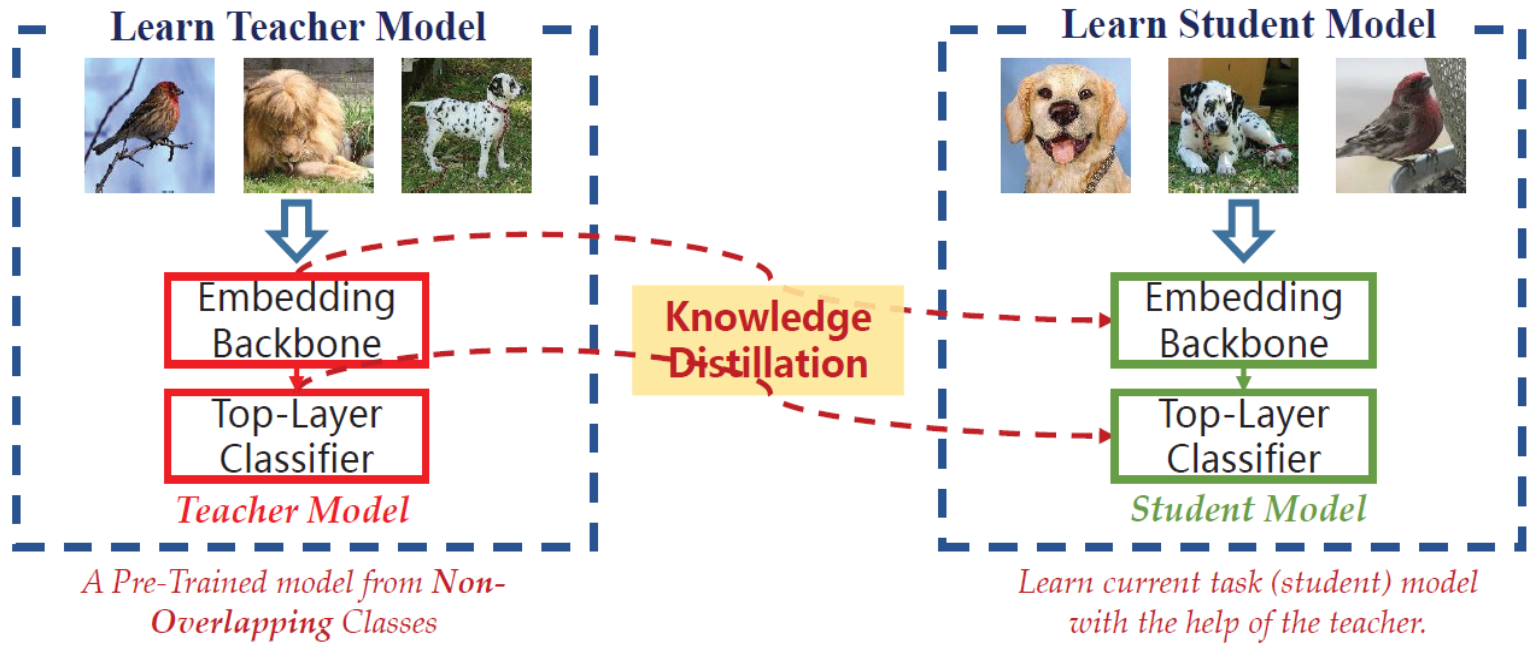

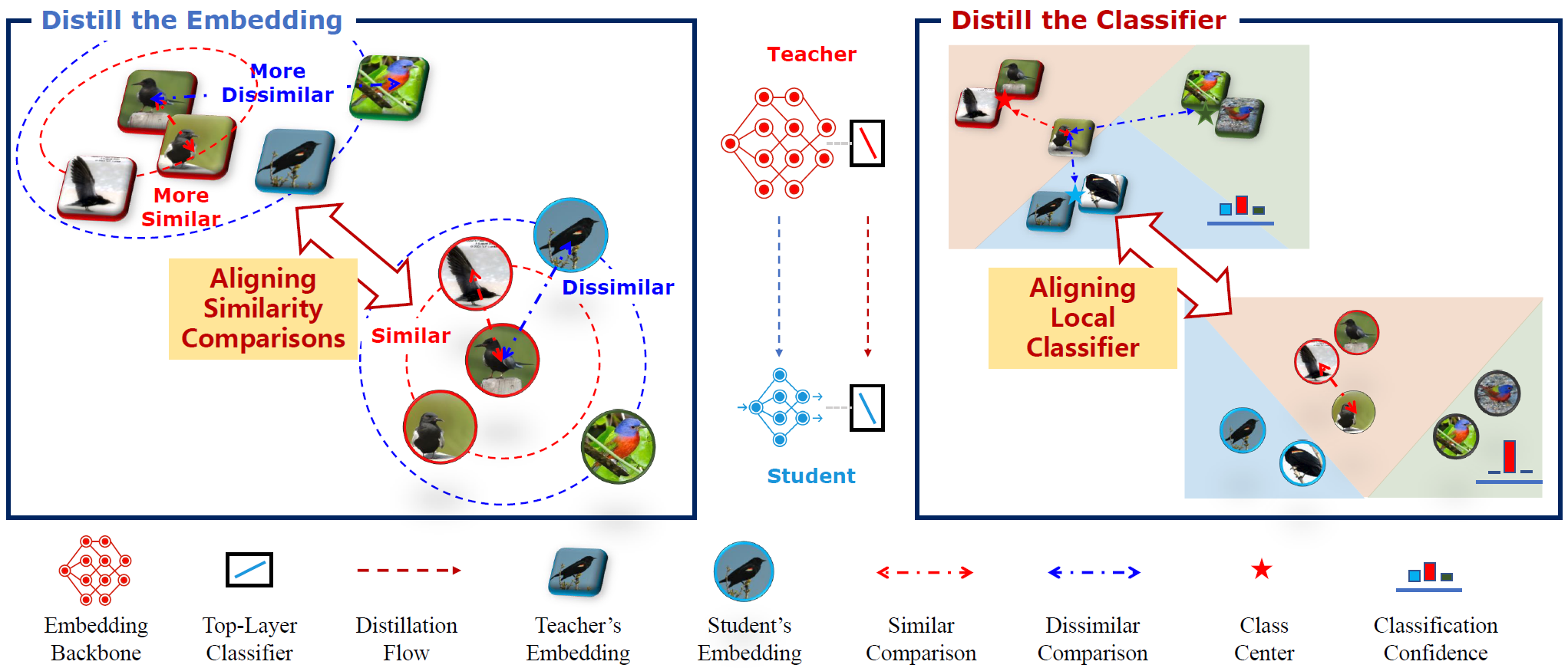

To reuse cross-task knowledge, we distill the comparison ability and the local classification ability of the embedding and the top-layer classifier from a teacher model, respectively. Our proposed method can also be applied to standard knowledge distillation and middle-shot learning.

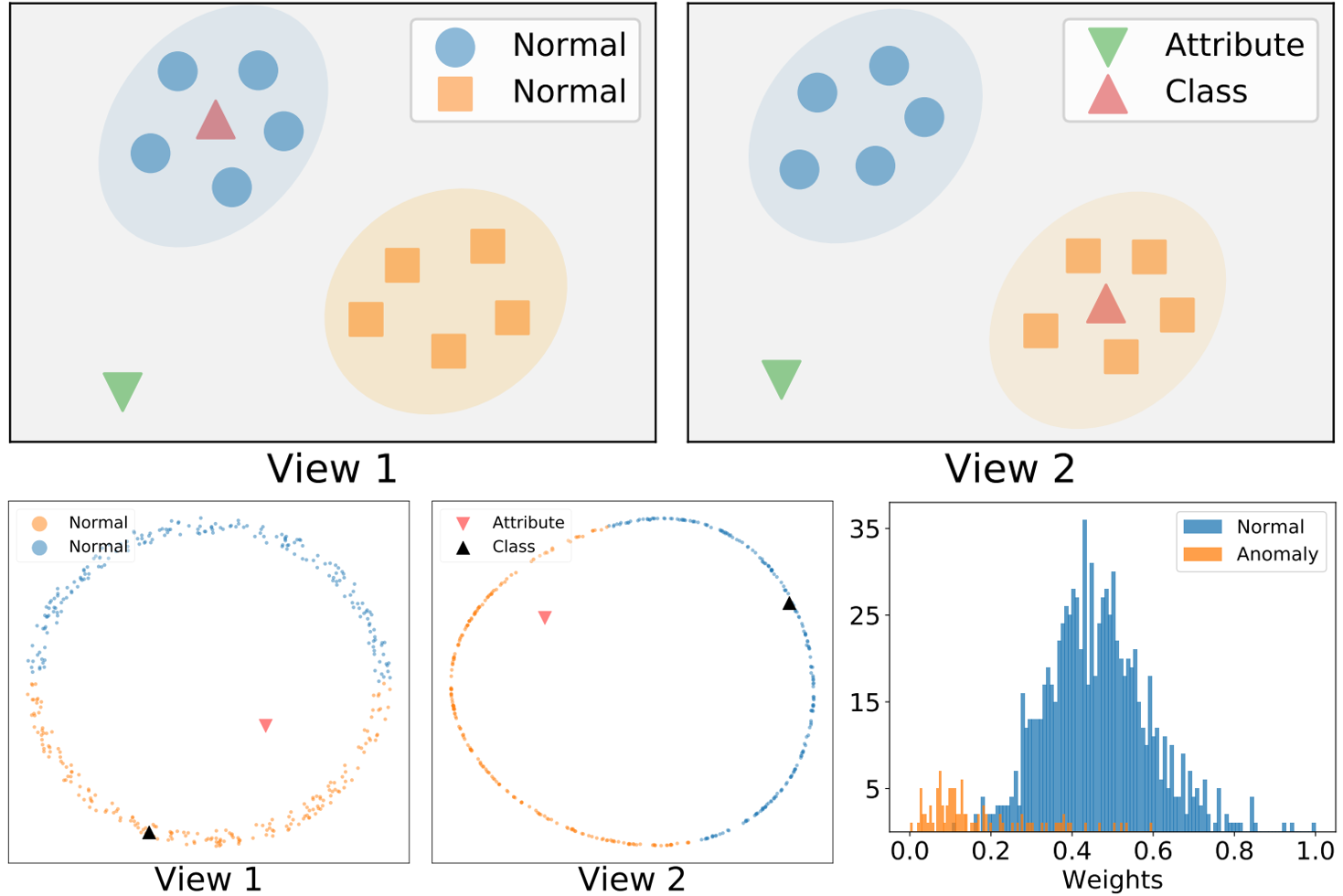

We study the problem of multi-view anomaly detection and propose a novel neighbor-based method. Our method can simultaneously detect dissension anomalies and unanimous anomalies without relying on a clustering assumption.

Publications - Journal Articles

We study the problem of distilling knowledge from a generalized teacher, whose label space may be the same as, overlap with, or be totally different from the student's. The comparison ability of the teacher is reused to bridge different label spaces.

Academic and Industrial Projects

Quant Research Internship

Party A: Baiont Quant

Su focused on technical problems in quantitative trading.

Some Project

Party A: Science and Technology Commission, Central Military Commission

Su is the project leader.

Technical Insight Internship

Party A: Department of Strategic Investment, Huawei

Su discerned technical innovation trends in AI and dynamics of cutting‑edge technologies.

App Usage Prediction and Preloading

Party A: Consumer Business Group, Huawei

This technology has been launched in the HarmonyOS service on the HUAWEI P40.

Awards & Honors & Contests

Tencent Scholarship, 2021

First-Class Academic Scholarship of Nanjing University, 2020 and 2019

CCF Elite Collegiate Student Award, 2018

ACM-ICPC Asia Regional Contest Silver Medal, 2017

ACM-ICPC China Invitational Contest Silver Medal, 2017

Interdisciplinary Contest in Modeling Meritorious Winner, 2017

First-Class Scholarship of Northwestern Polytechnical University, 2017, 2016, and 2015

China Collegiate Programming Contest Silver Medal, 2016

ACM-ICPC Asia Regional Contest Bronze Medal, 2016

National Scholarship for Undergraduates, 2016 and 2015

Academic Service

Conference PC Member/Reviewer: IJCAI-ECAI'20, AAAI'21, CVPR'21, KDD'21, ICCV'21, AAAI'22, CVPR'22, ICML'22

Journal Reviewer: SCIENTIA SINICA Informationis

Teaching Assistant

Introduction to Machine Learning. (For undergraduate students, Spring, 2022)

Digital Signal Processing. (For undergraduate and graduate students, Autumn, 2021)

Introduction to Machine Learning. (For undergraduate students, Spring, 2021)

Digital Signal Processing. (For undergraduate and graduate students, Autumn, 2020)

Correspondence

Email: lus [at] lamda.nju.edu.cn

Office: Yifu Building A201, Xianlin Campus of Nanjing University

Address: Su Lu, National Key Laboratory for Novel Software Technology, Nanjing University, Xianlin Campus, 163 Xianlin Avenue, Qixia District, Nanjing 210023, China